METHAD – Toward a MEdical ETHical ADvisor System for Ethical Decisions

Finished Project

This project aims to implement a prototype of an explicit ethical agent that will serve as an ethical advisory system, supporting physicians in resolving ethical dilemmas that arise in common clinical situations during patient care.

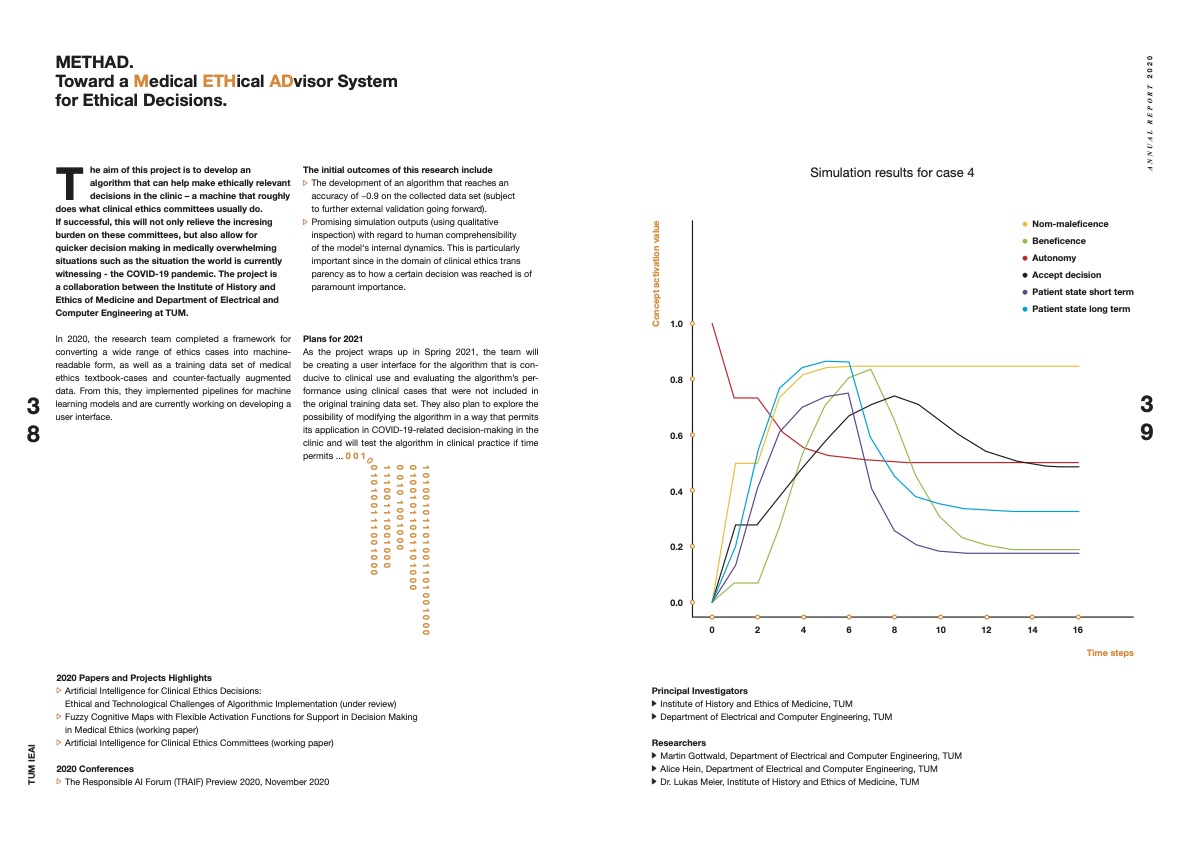

This scenario is intended to serve as a testbed for exploring whether explicit ethical agents can feasibly be developed for practical contexts, and what features and capabilities various machine-learning paradigms offer toward this goal. The learning paradigms tested include Reinforcement Learning, Inverse Reinforcement Learning, and Multiple Intention Inverse Reinforcement Learning. The project uses test cases inspired by realistic hospital situations in which physicians face ethical decisions and for which there is a common understanding of the permissible resolutions. The software implementing the ethical advisory system will learn to advise physicians in these situations.

Subsequently, the resulting prototype system will be tested on new but similar cases. We will then evaluate its advice by comparing it to expert judgments on the permissible resolutions.
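As a rough illustration of the evaluation step, the sketch below scores the system by the fraction of held-out cases in which its advised resolution falls within the set that experts consider permissible. This is an assumption for illustration only, not the project's published metric: the `TestCase` structure, the `agreement_rate` function, and the resolution labels (`respect_refusal`, `treat`, `seek_surrogate`) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestCase:
    """Hypothetical held-out clinical case used for evaluation."""
    case_id: str
    advised: str                 # resolution recommended by the advisory system
    permissible: frozenset       # resolutions the experts judge permissible


def agreement_rate(cases: list) -> float:
    """Fraction of cases whose advised resolution is among the expert-permissible ones.

    A simple agreement measure assumed for this sketch; other metrics are possible.
    """
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(1 for c in cases if c.advised in c.permissible)
    return hits / len(cases)


# Illustrative (made-up) cases with hypothetical resolution labels.
cases = [
    TestCase("case-01", "respect_refusal", frozenset({"respect_refusal"})),
    TestCase("case-02", "treat", frozenset({"respect_refusal", "seek_surrogate"})),
]
print(agreement_rate(cases))  # 0.5
```

Treating expert judgments as a *set* of permissible resolutions, rather than a single correct answer, reflects the idea that more than one resolution of a dilemma may be acceptable.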

Research Output:

Clinical Ethics – To Compute, or Not to Compute?

Algorithms for Ethical Decision-Making in the Clinic: A Proof of Concept

Open Peer Commentaries on Algorithms for Ethical Decision-Making in the Clinic: A Proof of Concept

A Fuzzy-Cognitive-Maps Approach to Decision-Making in Medical Ethics